When you type something on the keyboard, or upload a file, how does the computer know what to display? That's what character encoding is for. Text on a computer is not really made of letters. It is a series of numeric values, usually written as pairs of hexadecimal digits. The character encoding is the key that tells the computer which character each of these values corresponds to, much as spelling indicates which sounds correspond to which letters. Morse code is a kind of character encoding: it specifies how groups of long and short units (such as beeps or flashes of light) should be interpreted to form characters. Morse code covers little more than English letters and digits, but computers use many character encodings that cover letters, numbers, accent marks, punctuation marks, international symbols, and so on.
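Here is a minimal Python sketch of that idea, using only the built-in `str.encode`, `bytes.hex` and `bytes.decode`. The sample string and the choice of ASCII as the "key" are just illustrative assumptions.

```python
# Minimal sketch: text is stored as numbers, and the encoding is the key.
text = "Hi@"

# Encode the string into bytes, using ASCII as the key.
data = text.encode("ascii")

# Each byte is just a number; pairs of hex digits are the usual way to write them.
print(list(data))   # [72, 105, 64]
print(data.hex())   # 486940

# Decoding with the same key recovers the original characters.
print(data.decode("ascii"))  # Hi@
```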

There are many different character encodings, and there are many valid reasons for this. Which one to use depends on what you need it for. If you communicate in Russian, it makes sense to use a character encoding that supports the Cyrillic alphabet well. If you communicate in Korean, you will want one that represents Hangul and Hanja well. If you are a mathematician, you will want one that has all the mathematical and scientific symbols, as well as Greek and Latin glyphs. And if you want your documents to be readable by anyone, you'll want an encoding that is widely used and easily accessible.
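A short Python sketch shows why the choice matters: the same word takes a different number of bytes in different encodings, and bytes read with the wrong key turn into gibberish. The word and the codecs (`cp1251`, Windows Cyrillic, and `latin-1`) are arbitrary examples picked for illustration, not recommendations.

```python
# Sketch: the same text through different "keys" (encodings).
# cp1251 is used here only as an example of a Russian-friendly encoding.
word = "Привет"  # "Hello" in Russian

cyrillic_bytes = word.encode("cp1251")  # one byte per Cyrillic letter
utf8_bytes = word.encode("utf-8")       # two bytes per Cyrillic letter

print(len(cyrillic_bytes), len(utf8_bytes))  # 6 12

# Reading the cp1251 bytes with the right key gives the word back...
print(cyrillic_bytes.decode("cp1251"))  # Привет

# ...but reading them as Latin-1 produces gibberish, because the key is wrong.
print(cyrillic_bytes.decode("latin-1"))
```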

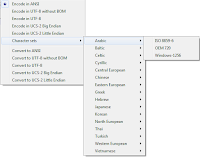

- Unicode - an encoding standard that aims at universality. At the time of writing it covered 93 different scripts organized into blocks, with many others in the works. Unicode works differently from other character sets: instead of hard-coding a glyph, it assigns each character a "code point", a number (written in hexadecimal) that identifies the character, while the glyphs themselves are provided independently by programs such as web browsers. Code points are commonly written like this: U+0040 (which stands for "@"). The best-known Unicode encodings are UTF-8 and UTF-16. UTF-8 aims for maximum compatibility with ASCII: it is built from 8-bit units, yet it can represent every character by using sequences of several bytes. UTF-16 gives up perfect compatibility with ASCII in favor of 16-bit code units, which cover most common characters in a single unit.

- ASCII - The American Standard Code for Information Interchange is one of the oldest character encodings. It was originally based on telegraph codes, and over time it evolved to include more symbols, along with some non-printing control characters that are now obsolete. It is probably the most basic encoding you will find on modern systems, since it covers only a limited Latin alphabet without accents. As a 7-bit encoding it allows only 128 characters, which is why several unofficial variants are in use throughout the world.

- ISO-8859 - ISO 8859 is the most widely used family of character encodings from the International Organization for Standardization. Each part is designated by a number, often accompanied by a descriptive name, such as ISO 8859-3 (Latin-3) or ISO 8859-6 (Latin/Arabic). It is a superset of ASCII, which means that the first 128 values are the same as in ASCII. However, it is an 8-bit code, so it allows 256 characters, and each part uses the extra values for a wider array of characters chosen for a particular group of languages. Latin-1 includes many accented letters and symbols, but it has been superseded by a revised set called Latin-9, which adds characters such as the euro sign.

- ISO-10646 - this is not an actual encoding, but the set of Unicode characters as standardized by ISO. It is especially important because it is the character repertoire used by HTML. It lacks some of the more advanced features provided by the Unicode Standard, such as rules for comparing text and for mixing right-to-left with left-to-right writing. Still, it works very well on the Internet because it supports a wide variety of scripts and lets the browser take care of rendering the glyphs. This makes localization somewhat easier.
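Several points in the list above can be checked with Python's standard library. This sketch is illustrative only: `code_point` is a hypothetical helper written for this example, and the sample characters are arbitrary (the last one, U+1D465, is a mathematical italic x whose code point does not fit in 16 bits).

```python
import unicodedata

# 1. Unicode gives each character a code point, written U+XXXX.
def code_point(ch: str) -> str:
    """Return the conventional U+XXXX notation for a single character."""
    return f"U+{ord(ch):04X}"

print(code_point("@"))          # U+0040
print(unicodedata.name("@"))    # COMMERCIAL AT

# 2. Bytes needed per character: UTF-8 keeps ASCII at one byte and uses longer
#    sequences otherwise; UTF-16 uses 16-bit units, and two of them (a surrogate
#    pair) for code points above U+FFFF.
for ch in ["@", "é", "€", "한", "\U0001D465"]:
    print(code_point(ch), len(ch.encode("utf-8")), len(ch.encode("utf-16-le")))

# 3. ASCII is 7-bit: anything above 127 cannot be encoded.
try:
    "é".encode("ascii")
except UnicodeEncodeError:
    print("é is not in ASCII")

# 4. Latin-1 has no euro sign; Latin-9 (ISO 8859-15) puts it at byte 0xA4,
#    where Latin-1 has the generic currency sign instead.
print("€".encode("iso8859_15"))   # b'\xa4'
print(b"\xa4".decode("latin-1"))  # ¤
```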

*Sample ASCII table*

However, each set has its drawbacks. ASCII has a limited set of marks, so it does not work very well for typographically correct text. Have you ever copied and pasted from Word only to end up with a strange combination of glyphs? That is the drawback of ISO-8859, or more precisely, of its poor interoperability with the so-called code pages specific to each operating system (I'm talking about you, Microsoft!). The main disadvantage of UTF-8 is the lack of adequate support in some editing and publishing applications. Another problem is that browsers do not always correctly interpret the sequence of bytes that makes up a UTF-8 encoded character, which results in unwanted glyphs being displayed. And of course, declaring one encoding on a web page while actually using the characters of another, or not declaring the encoding correctly at all, makes it very difficult for browsers to display the page correctly and for search engines to index it properly.
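Here is a minimal Python sketch of how those strange glyphs typically come about, assuming (for illustration) a text with a Word-style curly apostrophe and an accented letter that was saved as UTF-8 and then read as Windows code page 1252. It also shows what happens when the same text is forced into plain ASCII.

```python
# Sketch: how "strange combinations of glyphs" (mojibake) appear.
# The sample text uses a typographic apostrophe (U+2019), as Word often inserts.
original = "It\u2019s caf\u00e9 time"  # It’s café time

utf8_bytes = original.encode("utf-8")

# A program that wrongly assumes code page 1252 shows garbage...
garbled = utf8_bytes.decode("cp1252")
print(garbled)  # Itâ€™s cafÃ© time

# ...while decoding with the correct encoding restores the text.
print(utf8_bytes.decode("utf-8"))  # It’s café time

# ASCII has no curly quote or accented e, so they are replaced with "?".
ascii_version = original.encode("ascii", errors="replace")
print(ascii_version)  # b'It?s caf? time'
```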

For your own documents, manuscripts and so on, you can use whatever gets the job done correctly. For the web, however, most people seem to agree on using UTF-8 without the byte order mark (BOM), although this is not unanimous. As you can see, each character encoding has its own use, context, strengths and weaknesses.
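On the byte order mark question, Python happens to offer both flavors as codecs: `utf-8` (no BOM) and `utf-8-sig` (BOM written on encode, skipped on decode). The sketch below, with an arbitrary sample word, shows the three extra bytes the BOM adds and the invisible character it leaves behind when a program does not expect it.

```python
import codecs

text = "Ciao"

with_bom = text.encode("utf-8-sig")  # UTF-8 prefixed with the BOM
without_bom = text.encode("utf-8")   # plain UTF-8, the usual choice on the web

print(with_bom[:3] == codecs.BOM_UTF8)   # True: the bytes EF BB BF
print(len(with_bom) - len(without_bom))  # 3

# Decoding BOM-prefixed data as plain UTF-8 leaves an invisible U+FEFF at the start...
print(repr(with_bom.decode("utf-8")))  # '\ufeffCiao'

# ...while "utf-8-sig" strips it if present and also accepts data without it.
print(with_bom.decode("utf-8-sig"), without_bom.decode("utf-8-sig"))  # Ciao Ciao
```

A stray U+FEFF like this can surface as an odd glyph at the top of a page when software is not expecting it, which is one reason BOM-less UTF-8 tends to be the safer choice for the web.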
